HOW IT WORKS

Introduction

Watch NPR’s Christian McBride talk to FarPlay co-creator Dan Tepfer about low-latency audio and real-time remote musical collaboration:

How it works

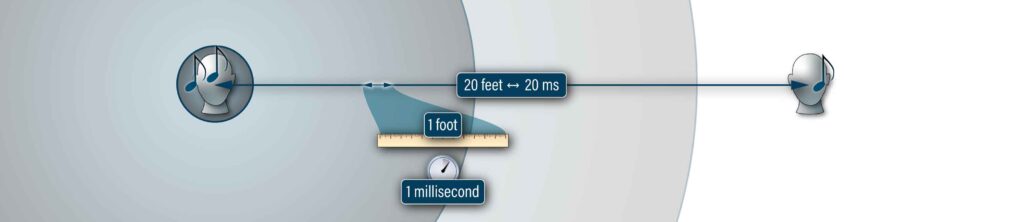

To play music with other people, especially in styles where rhythmic synchrony matters, the players need to hear each other as quickly as possible. The time between the moment one musician makes a sound and the moment another hears it is called latency. Even when playing together in the same room, there’s some latency, because sound travels through air at about 1 foot per millisecond: it takes roughly one thousandth of a second to travel one foot. So, if a drummer is 10ft (3m) away from a bassist in a room, there’s a one-way latency of about 10ms between them, assuming they’re playing acoustically. Musicians generally start to feel uncomfortable playing rhythmic music together when the one-way latency exceeds about 20ms. This makes intuitive sense: it feels strange to play rhythmic music with someone 20ft (6m) away from you, while 10ft is common and perfectly fine. This is one of the reasons large orchestras need conductors.
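The arithmetic above can be sketched in a few lines of Python. This is only an illustration of the rule of thumb from the text (about 1 foot per millisecond, and a comfort limit of about 20ms); the function and constant names are made up for this example.

```python
# Rule of thumb from the text: sound covers about 1 foot per millisecond in air.
FEET_PER_MS = 1.0
# One-way latency above which rhythmic playing starts to feel uncomfortable (from the text).
COMFORT_LIMIT_MS = 20.0


def acoustic_latency_ms(distance_ft: float) -> float:
    """Approximate one-way acoustic latency for players distance_ft apart."""
    return distance_ft / FEET_PER_MS


def is_comfortable(latency_ms: float) -> bool:
    """True when the one-way latency does not exceed the comfort limit."""
    return latency_ms <= COMFORT_LIMIT_MS


if __name__ == "__main__":
    for feet in (10, 20, 30):
        latency = acoustic_latency_ms(feet)
        print(f"{feet} ft: {latency:.0f} ms, comfortable: {is_comfortable(latency)}")
```

By the same measure, a 500ms video-call latency is like trying to play with someone roughly 500 feet away.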

In contrast, apps like Zoom, FaceTime, Skype or WhatsApp have latencies on the order of half a second (500ms), about 25 times the 20ms comfort threshold. Anyone who’s tried to make music over the internet using these conventional means knows it’s an exercise in frustration.

So how does FarPlay reduce the latency so much? In several ways. First, FarPlay transmits completely uncompressed audio. As a result, there’s no time-consuming compression stage on the sending side and no decompression stage on the receiving end (and, as an added benefit, your audio is transmitted in the highest possible quality). Second, FarPlay sends audio directly between participants, from device to device, rather than routing it through a third-party server. This minimizes the distance the data has to travel. Third, FarPlay gives you complete control over the amount of buffering applied to the sound you receive.
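To get a feel for why distance matters in the second point, here is a rough sketch comparing a direct path with one relayed through a server. It assumes signals travel through optical fiber at roughly 200km per millisecond (about two thirds of the speed of light in a vacuum). The distances are hypothetical, and real network routes are longer than straight lines, so treat the numbers as lower bounds rather than measurements.

```python
# Assumption: signals in optical fiber travel at roughly 200 km per millisecond.
FIBER_KM_PER_MS = 200.0


def propagation_delay_ms(path_km: float) -> float:
    """Lower bound on one-way travel time for a path of the given length."""
    return path_km / FIBER_KM_PER_MS


def direct_vs_relayed(direct_km: float, to_server_km: float, from_server_km: float):
    """Return (direct_ms, relayed_ms) for a device-to-device path and a server detour."""
    direct = propagation_delay_ms(direct_km)
    relayed = propagation_delay_ms(to_server_km + from_server_km)
    return direct, relayed


if __name__ == "__main__":
    # Hypothetical example: two players 500 km apart, server 2000 km from each.
    direct, relayed = direct_vs_relayed(500, 2000, 2000)
    print(f"direct: {direct} ms, via server: {relayed} ms")
```

In this made-up scenario the detour alone would use up the entire 20ms budget before any buffering or audio hardware delay is counted.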

Buffering

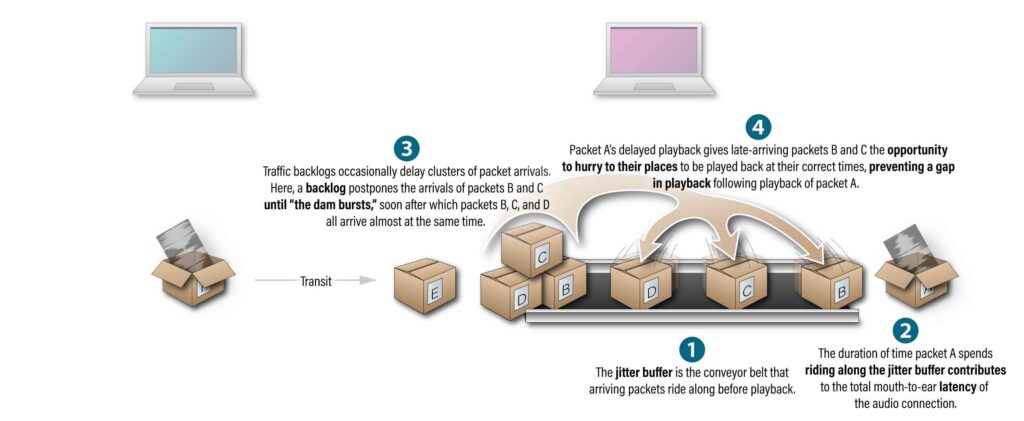

What’s buffering? It’s how long the app waits before sending the audio it has received to your ears. Why wait at all? Because on the internet, data is sent in small chunks called packets, and the time it takes a packet to get from one place to another varies constantly with changing traffic conditions. So, if you play a packet the instant it arrives, the following packet may not have arrived by the time the first has finished playing. The result is a glitch: a brief gap in the sound, which you hear as static. So it’s a good idea to wait until you have a few packets stored up before playing the first one, to give yourself a little margin.

With enough buffering, you’ll never have a glitch in the sound, but you’ll have high latency. With too little buffering, you’ll have low latency, but you’ll hear too much static.
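The trade-off can be seen in a small simulation. This is a simplified sketch, not FarPlay’s actual algorithm: it assumes packets carry 5ms of audio each, that playback starts a fixed `buffer_ms` after the first packet arrives, and that any packet arriving after its scheduled playback time counts as one glitch. The arrival times are invented for illustration.

```python
def count_glitches(arrivals_ms, packet_ms, buffer_ms):
    """Count packets that arrive after their scheduled playback time.

    arrivals_ms: arrival time of each packet, listed in send order.
    packet_ms:   duration of audio carried by one packet.
    buffer_ms:   wait between the first packet's arrival and the start of playback.
    """
    start = arrivals_ms[0] + buffer_ms
    glitches = 0
    for i, arrival in enumerate(arrivals_ms):
        play_at = start + i * packet_ms  # when packet i is due at the speaker
        if arrival > play_at:
            glitches += 1
    return glitches


# Hypothetical arrivals: packets sent every 5 ms, network delay varying from 20 to 31 ms.
ARRIVALS_MS = [20, 27, 41, 36, 47, 45]

if __name__ == "__main__":
    for buffer_ms in (0, 5, 10, 15):
        glitches = count_glitches(ARRIVALS_MS, packet_ms=5, buffer_ms=buffer_ms)
        print(f"buffer {buffer_ms:>2} ms: {glitches} glitches")
```

With these numbers, the glitch count falls from 4 to 2 to 1 to 0 as the buffer grows from 0 to 15ms, and every millisecond of buffer is a millisecond of added latency.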

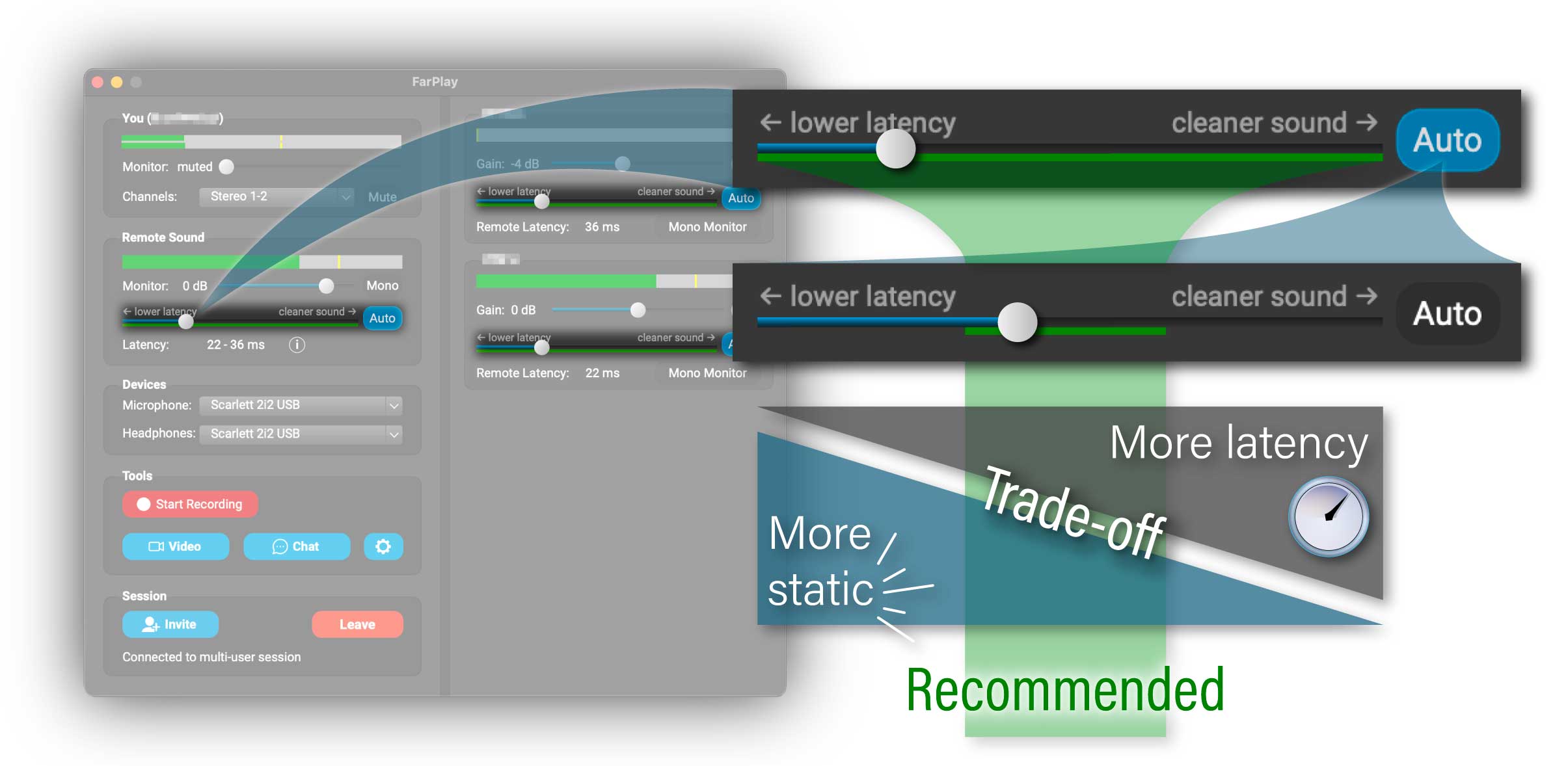

FarPlay gives you a slider that lets you fine-tune the amount of buffering, and it shows you recommended slider settings to make it even easier to find the buffering sweet spot.
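One way to think about a “sweet spot” is as the smallest buffer that would have avoided every glitch over a recent stretch of packets. The sketch below illustrates that idea only; it is not a description of how FarPlay computes its recommendations, and the function name and sample data are hypothetical.

```python
def smallest_glitch_free_buffer_ms(arrivals_ms, packet_ms):
    """Smallest buffer (in ms) for which no packet in the sample arrives late.

    Packet i is due at arrivals_ms[0] + buffer + i * packet_ms, so the buffer
    must cover the worst lateness of any packet relative to the first one.
    """
    first = arrivals_ms[0]
    worst_lateness = max(
        (arrival - first) - i * packet_ms
        for i, arrival in enumerate(arrivals_ms)
    )
    return max(0, worst_lateness)


if __name__ == "__main__":
    # Hypothetical arrivals: packets sent every 5 ms with varying network delay.
    sample = [20, 27, 41, 36, 47, 45]
    print(smallest_glitch_free_buffer_ms(sample, packet_ms=5))
```

A practical setting would likely add some margin on top of this value, since future packets can arrive later than anything in the sample.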

Learn more about what causes latency and how FarPlay helps you manage it.